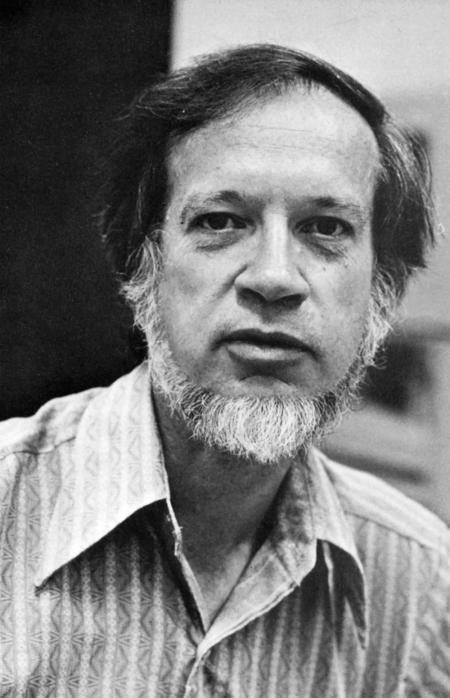

Frederick Burtis Thompson, professor of applied philosophy and computer science, emeritus, passed away on May 27, 2014. The research that Thompson began in the 1960s helped pave the way for today's "expert systems" such as Watson, IBM's Jeopardy!-winning supercomputer, and the interactive databases used in the medical profession. His work provided quick and easy access to the information stored in such systems by teaching the computer to understand human language, rather than forcing the casual user to learn a programming language.

Indeed, Caltech's Engineering & Science magazine reported in 1981 that "Thompson predicts that within a decade a typical professional [by which he meant plumbers as well as doctors] will carry a pocket computer capable of communication in natural language."

"Natural language," otherwise known as everyday English, is rife with ambiguity. As Thompson noted in that same article, "Surgical reports, for instance, usually end with the statement that 'the patient left the operating room in good condition.' While doctors would understand that the phrase refers to the person's condition, some of us might imagine the poor patient wielding a broom to clean up."

Thompson cut through these ambiguities by paring "natural" English down to "formal" sublanguages that applied only to finite bodies of knowledge. While a typical native-born English speaker knows the meanings of 20,000 to 50,000 words, Thompson realized that very few of these words are actually used in any given situation. Instead, we constantly shift between sublanguages—sometimes from minute to minute—as we interact with other people.

Thompson's computer-compatible sublanguages had vocabularies of a few thousand words—some of which might be associated with pictures, audio files, or even video clips—and a simple grammar with a few dozen rules. In the plumber's case, this language might contain the names and functions of pipe fittings, vendors' catalogs, maps of the city's water and sewer systems, sets of architectural drawings, and the building code. So, for example, a plumber at a job site could type "I need a ¾ to ½ brass elbow at 315 South Hill Avenue," and, after some back-and-forth to clarify the details (such as threaded versus soldered, or a 90-degree elbow versus a 45), the computer would place the order and give the plumber directions to the store.

Born on July 26, 1922, Thompson served in the Army and worked at Douglas Aircraft during World War II before earning bachelor's and master's degrees in mathematics at UCLA in 1946 and 1947, respectively. He then moved to UC Berkeley to work with logician Alfred Tarski, whose mathematical definitions of "truth" in formal languages would set the course of Thompson's later career.

On getting his PhD in 1951, Thompson joined the RAND (Research ANd Development) Corporation, a "think tank" created within Douglas Aircraft during the war and subsequently spun off as an independent organization. It was the dawn of the computer age—UNIVAC, the first commercial general-purpose electronic data-processing system, went on sale that same year. Unlike previous machines built to perform specific calculations, UNIVAC ran programs written by its users. Initially, these programs were limited to simple statistical analyses; for example, the first UNIVAC was bought by the U.S. Census Bureau. Thompson pioneered a process called "discrete event simulation" that modeled complex phenomena by breaking them down into sequences of simple actions that happened in specified order, both within each sequence and in relation to actions in other, parallel sequences.
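
The idea behind discrete event simulation can be illustrated with a minimal sketch. This is not Thompson's own code: the function `run`, the event names, and the delays are hypothetical, chosen only to show parallel sequences of simple actions being processed in time order from a priority queue.

```python
import heapq
from itertools import count


def run(initial_events, handlers, until):
    """Process events in time order; handlers may schedule later events."""
    tie = count()  # insertion counter keeps same-time events in FIFO order
    queue = [(t, next(tie), name) for t, name in initial_events]
    heapq.heapify(queue)
    log = []
    while queue:
        time, _, name = heapq.heappop(queue)
        if time > until:
            break
        log.append((time, name))
        # A handler returns (delay, event_name) pairs to schedule next.
        for delay, follow_up in handlers.get(name, lambda: [])():
            heapq.heappush(queue, (time + delay, next(tie), follow_up))
    return log


# Hypothetical example: a truck loads, drives, and unloads (one sequence)
# while a dock opens at time 2 (a second, parallel sequence).
handlers = {
    "load": lambda: [(3, "drive")],
    "drive": lambda: [(4, "unload")],
}
log = run([(0, "load"), (2, "dock opens")], handlers, until=10)
print(log)
```

Each action keeps its place within its own sequence (load, then drive, then unload), and the shared clock fixes its place relative to actions in the other sequence.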

Thompson also helped model a thermonuclear attack on America's major cities in order to help devise an emergency services plan. According to Philip Neches (BS '73, MS '77, PhD '83), a Caltech trustee and one of Thompson's students, "When the team developed their answer, Fred was in tears: the destruction would be so devastating that no services would survive, even if a few people did. . . . This kind of hard-headed analysis eventually led policy makers to a simple conclusion: the only way to win a nuclear war is to never have one." Refined versions of these models were used in 2010 to optimize the deployment of medical teams in the wake of the magnitude-7.0 Haiti earthquake, according to Neches. "The models treated the doctors and supplies as the bombs, and calculated the number of people affected," he explains. "Life has its ironies, and Fred would be the first to appreciate them."

In 1957, Thompson joined General Electric Corporation's computer department. By 1960 he was working at GE's TEMPO (TEchnical Military Planning Operation) in Santa Barbara, where his natural-language research began. "Fred's first effort to teach English to a computer was a system called DEACON [for Direct English Access and CONtrol], developed in the early 1960s," says Neches.

Thompson arrived at Caltech in 1965 with a joint professorship in engineering and the humanities. "He advised the computer club as a canny way to recruit a small but dedicated cadre of students to work with him," Neches recalls. In 1969, Thompson began a lifelong collaboration with Bozena Dostert, a senior research fellow in linguistics who died in 2002. The collaboration was personal as well as professional; their wedding was the second marriage for each.

Although Thompson and Dostert's work was grounded in linguistic theory, they moved beyond the traditional classification of words into parts of speech to incorporate an operational approach similar to that of computer languages such as FORTRAN. The result was REL, for Rapidly Extensible Language. REL's data structure was based on "objects" that not only described an item or action but also allowed the user to specify the interval for which the description applied. For example:

Object: Mary Ann Summers

Attribute: driver's license

Value: yes

Start time: 1964

End time: current
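
A record like this one can be modeled, as a rough sketch, with a small Python class. The class name `TimedFact`, its field names, and the `holds_in` method are assumptions made for illustration and are not taken from REL itself; an end time of `None` stands in for "current."

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TimedFact:
    """One attribute of an object, valid over an interval of years."""
    obj: str
    attribute: str
    value: str
    start: int
    end: Optional[int] = None  # None stands for "current" (still in effect)

    def holds_in(self, year: int) -> bool:
        """True if the fact applies during the given year."""
        return self.start <= year and (self.end is None or year <= self.end)


fact = TimedFact("Mary Ann Summers", "driver's license", "yes", start=1964)
print(fact.holds_in(1963))  # False
print(fact.holds_in(1970))  # True
```

Storing the interval alongside the value lets a question such as "Did Mary Ann Summers have a driver's license in 1970?" be answered by checking the year against the start and end times.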

"This foreshadowed today's semantic web representations," according to Peter Szolovits (BS '70, PhD '75), another of Thompson's students.

In a uniquely experimental approach, the Thompsons tested REL on complex optimization problems such as figuring out how to load a fleet of freighters—making sure the combined volumes of the assorted cargoes didn't exceed the capacities of the holds, distributing the weights evenly fore and aft, planning the most efficient itineraries, and so forth. Volunteers worked through various strategies by typing questions and commands into the computer. The records of these human-computer interactions were compared to transcripts of control sessions in which pairs of students attacked the same problem over a stack of paperwork face-to-face or by communicating with each other from separate locations via teletype machines. Statistical analysis of hundreds of hours' worth of seemingly unstructured dialogues teased out hidden patterns. These patterns included a five-to-one ratio between complete sentences—which had a remarkably invariant average length of seven words—and three-word sentence fragments. Similar patterns are heard today in the clipped cadences of the countdown to a rocket launch.

The "extensible" in REL referred to the ease with which new knowledge bases (vocabulary lists and the relationships between their entries) could be added. In the 1980s, the Thompsons extended REL to POL, for Problem Oriented Language, which could work out the meanings of words not in its vocabulary and cope with such human frailties as poor spelling, bad grammar, and errant punctuation, all on a high-end desktop computer at a time when other natural-language processors ran on room-sized mainframe machines.

"Fred taught both the most theoretical and the most practical computer science courses at the Institute long before Caltech had a formal computer science department. In his theory class, students proved the equivalence of a computable function to a recursive language to a Turing machine. In his data analysis class, students got their first appreciation of the growing power of the computer to handle volumes of data in novel and interesting ways," Neches says. "Fred and his students pioneered the arena of 'Big Data' more than 50 years ahead of the pack." Thompson co-founded Caltech's official computer science program along with professors Carver Mead (BS '56, MS '57, PhD '60) and Ivan Sutherland (MS '60) in 1976.

Adds Remy Sanouillet (MS '82, PhD '94), Thompson's last graduate student, "In terms of vision, Fred 'invented' the Internet well before Al Gore did. He saw, really saw, that we would be asking computers questions that could only be answered by fetching pieces of information stored on servers all over the world, putting the pieces together, and presenting the result in a universally comprehensible format that we now call HTML."

Thompson was a member of the scientific honorary society Sigma Xi, the Association for Symbolic Logic, and the Association for Computing Machinery. He wrote or coauthored more than 40 unclassified papers—and an unknown number of classified ones.

Thompson is survived by his first wife, Margaret Schnell Thompson, and his third wife, Carmen Edmond-Thompson; two children by his first marriage, Mary Ann Thompson Arildsen and Scott Thompson; and four grandchildren.

Plans for a celebration of Thompson's life are pending.
